Lesson 1.2: Kubernetes Architecture

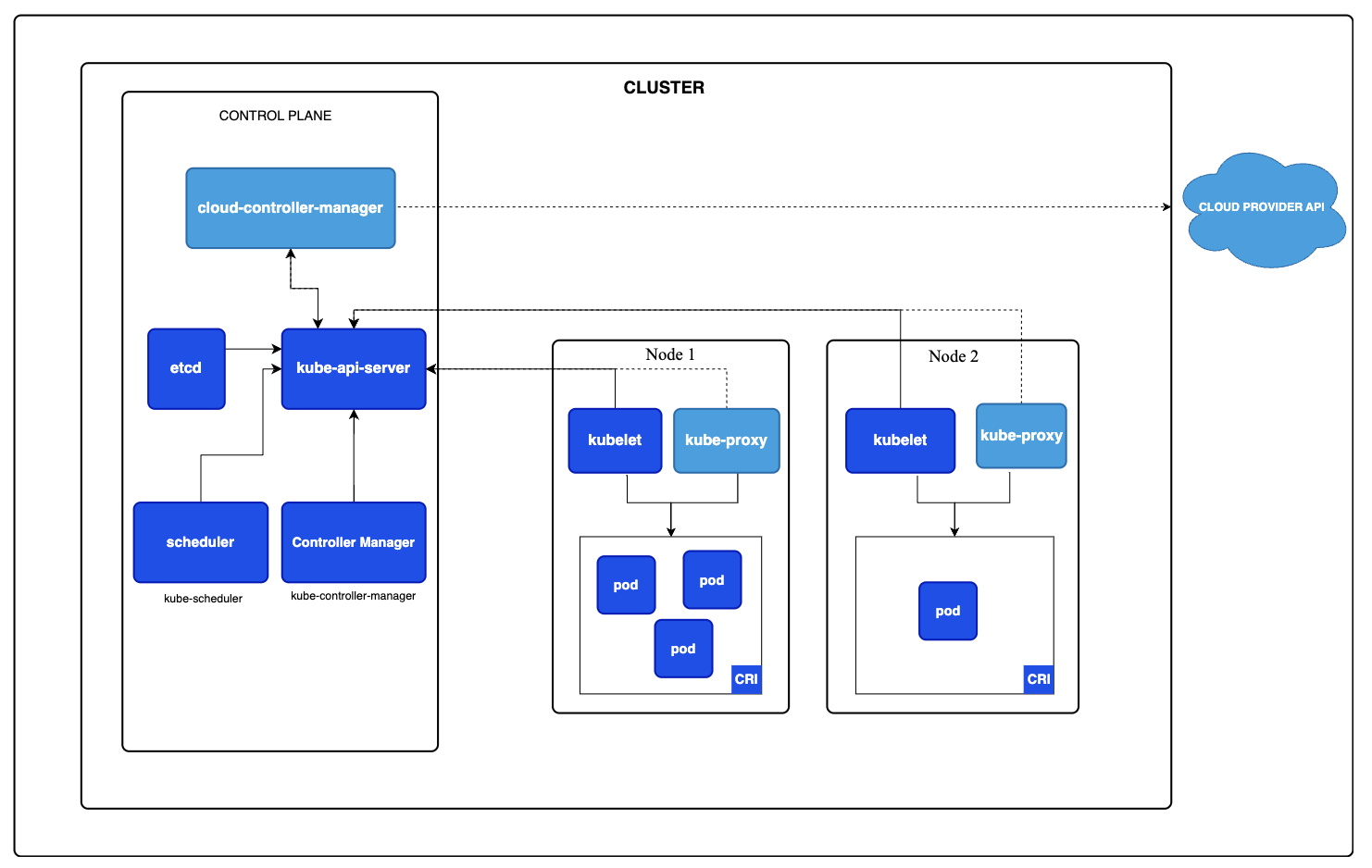

A Kubernetes cluster consists of a control plane plus a set of worker machines, called nodes, that run containerized applications. Every cluster needs at least one worker node in order to run pods. In a basic setup, a single master (control plane) node controls the cluster, but multiple master nodes can be used to provide high availability [1]. Kubernetes runs its containers inside pods that are scheduled onto the worker nodes. A pod can encapsulate one or more containers [2].

Figure 1. Kubernetes cluster components.

The master node is made up of several components, including the kube-apiserver, kube-controller-manager, kube-scheduler, and etcd.

Components of the Kubernetes Control Plane

The Kubernetes control plane is responsible for managing the cluster, making global decisions, and responding to events. Below is an explanation of each control plane component:

kube-apiserver

- The kube-apiserver is the entry point to the Kubernetes control plane, through which the entire cluster is controlled. It receives all requests from clients and from the other cluster components, authenticates them, and updates the corresponding objects in the cluster's database, etcd [1].

- Users interact with it via kubectl or other clients.

- Role: Exposes the Kubernetes API to users and other components.

- Scaling: Designed for horizontal scaling by deploying multiple instances with load balancing.

- Importance: Serves as the single communication hub for all Kubernetes operations.
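To make the request path concrete, here is a minimal, hypothetical in-memory model of what the kube-apiserver does with each request: authenticate the caller, then persist the object in a key-value store that stands in for etcd. The `ToyAPIServer` class and its token table are assumptions for illustration, not the real kube-apiserver API, and the real server also performs authorization and admission control, which are omitted here. The key layout imitates the default `/registry/<kind>/<namespace>/<name>` prefix under which Kubernetes stores objects in etcd.

```python
from dataclasses import dataclass, field


class Unauthorized(Exception):
    """Raised when a request carries an unknown bearer token."""


@dataclass
class ToyAPIServer:
    # Hypothetical token -> user table; real clusters authenticate with
    # client certificates, service-account tokens, OIDC, and more.
    tokens: dict[str, str]
    # A plain dict standing in for etcd.
    store: dict[str, dict] = field(default_factory=dict)

    def authenticate(self, token: str) -> str:
        user = self.tokens.get(token)
        if user is None:
            raise Unauthorized("unknown token")
        return user

    def _key(self, kind: str, namespace: str, name: str) -> str:
        # Key layout modeled on the default /registry/<kind>/<namespace>/<name> prefix.
        return f"/registry/{kind}/{namespace}/{name}"

    def create(self, token: str, kind: str, namespace: str, name: str, spec: dict) -> str:
        user = self.authenticate(token)  # every request is authenticated first
        key = self._key(kind, namespace, name)
        self.store[key] = {"spec": spec, "createdBy": user}
        return key

    def get(self, token: str, kind: str, namespace: str, name: str) -> dict:
        self.authenticate(token)
        return self.store[self._key(kind, namespace, name)]


api = ToyAPIServer(tokens={"abc123": "alice"})
print(api.create("abc123", "pods", "default", "web", {"image": "nginx"}))
# /registry/pods/default/web
```

Funnelling every read and write through one authenticated entry point is what lets the other components stay simple: none of them touches the store directly.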

kube-controller-manager

- The kube-controller-manager continuously watches the shared state of the cluster through the kube-apiserver and tries to move the current state toward the desired state [1].

- Role: Controllers handle specific tasks such as:

- Node controller: Detects and reacts when nodes go down.

- Job controller: Creates pods for Jobs to complete one-off tasks.

- Endpoint Slice controller: Manages EndpointSlice objects for linking Services and Pods.

- Service Account controller: Creates default service accounts for namespaces.

- Design: Combines multiple controllers into one binary to simplify deployment.

- For example, it is responsible for noticing and responding when nodes go down or ensuring the correct number of running replicas for the application in the cluster [1].
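The watch-and-reconcile behavior described above can be sketched as a control loop. This is a simplified, hypothetical replica controller: it compares the desired replica count with the pods that currently exist and returns the create and delete actions needed to close the gap. The function name and the `web-0`, `web-1` naming scheme are assumptions for illustration; real controllers such as the ReplicaSet controller work through the kube-apiserver's watch API and generate random name suffixes.

```python
import itertools


def reconcile(desired: int, current: list[str], prefix: str) -> tuple[list[str], list[str]]:
    """Compare desired and observed state; return (pods_to_create, pods_to_delete)."""
    diff = desired - len(current)
    if diff > 0:
        existing = set(current)
        # Generate names prefix-0, prefix-1, ... and skip ones already in use.
        candidates = (f"{prefix}-{i}" for i in itertools.count())
        fresh = (n for n in candidates if n not in existing)
        return list(itertools.islice(fresh, diff)), []
    if diff < 0:
        # Too many pods: mark the surplus (here, simply the last ones) for deletion.
        return [], current[diff:]
    return [], []  # observed state already matches desired state


# A real controller loops forever, woken by watch events; three passes suffice here.
pods = ["web-0"]
for _ in range(3):
    to_create, to_delete = reconcile(3, pods, "web")
    pods = [p for p in pods if p not in to_delete] + to_create
print(pods)  # ['web-0', 'web-1', 'web-2']
```

Because `reconcile` only looks at the gap between desired and observed state, running it again after convergence is harmless, which is why controllers can safely re-run on every event.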


kube-scheduler

- The kube-scheduler watches for newly created pods that have no node assigned and selects a node for each one. It first filters out nodes that cannot run the pod, then scores the remaining feasible nodes and assigns the pod to the node with the highest score.

- Decision Factors:

- Resource requirements (CPU, memory, etc.).

- Hardware/software/policy constraints.

- Affinity/anti-affinity rules.

- Data locality.

- Inter-workload interference.

- Deadlines.

- Importance: Ensures optimal placement of workloads across the cluster.
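A simplified sketch of the filter-then-score flow follows, under stated assumptions: pods and nodes are described only by CPU (millicores) and memory (MiB), filtering drops nodes without enough free capacity, and scoring favors the node with the largest fraction of capacity left after placement, loosely in the spirit of a "least allocated" strategy. The data classes and the scoring formula are illustrative, not kube-scheduler's actual plugins, which also weigh affinity, constraints, and the other factors listed above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu_m: int   # free CPU in millicores
    free_mem_mi: int  # free memory in MiB
    cap_cpu_m: int    # allocatable CPU in millicores
    cap_mem_mi: int   # allocatable memory in MiB


@dataclass
class PodRequest:
    cpu_m: int
    mem_mi: int


def feasible(node: Node, pod: PodRequest) -> bool:
    # Filter step: the node must have enough free CPU and memory.
    return node.free_cpu_m >= pod.cpu_m and node.free_mem_mi >= pod.mem_mi


def score(node: Node, pod: PodRequest) -> float:
    # Score step: average fraction of capacity still free after placement, scaled to 0..100.
    cpu_left = (node.free_cpu_m - pod.cpu_m) / node.cap_cpu_m
    mem_left = (node.free_mem_mi - pod.mem_mi) / node.cap_mem_mi
    return 100 * (cpu_left + mem_left) / 2


def schedule(pod: PodRequest, nodes: list[Node]) -> Optional[str]:
    candidates = [n for n in nodes if feasible(n, pod)]
    if not candidates:
        return None  # no feasible node: the pod stays Pending
    return max(candidates, key=lambda n: score(n, pod)).name


nodes = [
    Node("node-a", free_cpu_m=500, free_mem_mi=1024, cap_cpu_m=4000, cap_mem_mi=8192),
    Node("node-b", free_cpu_m=3000, free_mem_mi=6144, cap_cpu_m=4000, cap_mem_mi=8192),
    Node("node-c", free_cpu_m=100, free_mem_mi=256, cap_cpu_m=2000, cap_mem_mi=4096),
]
print(schedule(PodRequest(cpu_m=250, mem_mi=512), nodes))  # node-b
```

Here `node-c` is filtered out because it lacks CPU, and `node-b` outscores `node-a` because far more of its capacity remains free after placement.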

etcd

- etcd is a consistent, distributed key-value store used to store all cluster data, including configuration data and the state of the cluster [1].

- Role: Stores all cluster state and configuration.

- Backup: A backup plan is essential, because losing etcd data can result in complete cluster failure.

- Use Case: Acts as the central repository for Kubernetes metadata.
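Because etcd is a key-value store, Kubernetes objects end up as values under hierarchical keys; with the default configuration these keys start with `/registry/` (for example `/registry/pods/<namespace>/<name>`). The toy class below is an in-memory stand-in, not the etcd client API, and it has no replication or persistence. It shows how a prefix query lists every object of one kind, how a store-wide revision counter grows with each write (etcd keeps a similar revision), and how a point-in-time snapshot preserves data that later writes change.

```python
class ToyKV:
    """In-memory key-value store loosely modeled on etcd (no Raft, no persistence)."""

    def __init__(self) -> None:
        self._data: dict[str, tuple[str, int]] = {}  # key -> (value, revision of last write)
        self.revision = 0  # store-wide revision, incremented on every write

    def put(self, key: str, value: str) -> int:
        self.revision += 1
        self._data[key] = (value, self.revision)
        return self.revision

    def get(self, key: str):
        entry = self._data.get(key)
        return None if entry is None else entry[0]

    def get_prefix(self, prefix: str) -> dict[str, str]:
        # Range query over all keys sharing a prefix, e.g. every pod in a namespace.
        return {k: v for k, (v, _) in sorted(self._data.items()) if k.startswith(prefix)}

    def snapshot(self) -> dict[str, tuple[str, int]]:
        # Point-in-time copy, analogous in spirit to taking an etcd backup snapshot.
        return dict(self._data)


kv = ToyKV()
kv.put("/registry/pods/default/web-0", '{"image": "nginx"}')
kv.put("/registry/pods/default/web-1", '{"image": "nginx"}')
kv.put("/registry/services/default/web", '{"port": 80}')
print(list(kv.get_prefix("/registry/pods/default/")))
# ['/registry/pods/default/web-0', '/registry/pods/default/web-1']
print(kv.revision)  # 3
```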

cloud-controller-manager

- The cloud-controller-manager is a Kubernetes control plane component that embeds cloud-specific control logic. It integrates Kubernetes with cloud provider APIs while keeping cloud-specific operations separate from cluster-specific functions.

- Role:

- Integrates Kubernetes with cloud provider APIs.

- Separates cloud-specific logic from cluster-specific logic.

- Controllers within the cloud-controller-manager:

- Node controller: Checks with the cloud provider whether a node that has stopped responding has been deleted in the cloud.

- Route controller: Sets up network routes in the cloud infrastructure.

- Service controller: Manages cloud provider load balancers.

- Usage: Not required for on-premises or standalone environments.

- Scaling: Can run multiple instances to improve performance and fault tolerance.
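The separation of cloud-specific and cluster-specific logic is essentially a plug-in interface. In the hypothetical sketch below, a cluster-side node cleanup routine depends only on an abstract `CloudProvider` interface, and a fake provider answers the cloud-specific question "does this machine still exist?". All class and function names are assumptions for illustration; they mirror the idea behind the node controller described above, not the actual Kubernetes code.

```python
from abc import ABC, abstractmethod


class CloudProvider(ABC):
    """Hypothetical interface: the only place cloud-specific calls would live."""

    @abstractmethod
    def instance_exists(self, node_name: str) -> bool: ...


class FakeCloud(CloudProvider):
    """Stand-in for a real provider's API; tracks which VMs still exist."""

    def __init__(self, instances: set[str]) -> None:
        self.instances = instances

    def instance_exists(self, node_name: str) -> bool:
        return node_name in self.instances


def cleanup_unresponsive_nodes(nodes: dict[str, bool], cloud: CloudProvider) -> list[str]:
    """Cluster-side logic: for each node that stopped responding (ready=False), ask the
    cloud whether its machine was deleted; if so, remove the Node object. Returns removed names."""
    removed = []
    for name, ready in list(nodes.items()):
        if not ready and not cloud.instance_exists(name):
            del nodes[name]
            removed.append(name)
    return removed


cluster_nodes = {"node-a": True, "node-b": False, "node-c": False}
cloud = FakeCloud(instances={"node-a", "node-c"})  # node-b's VM was deleted in the cloud
print(cleanup_unresponsive_nodes(cluster_nodes, cloud))  # ['node-b']
```

`node-c` is unresponsive but its machine still exists, so it is kept; swapping `FakeCloud` for another provider would not require changing the cluster-side function.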

Components of Kubernetes Node Architecture

Applications are deployed on the worker nodes. Each worker node is managed by the master node and has three main components: the kubelet, the container runtime, and kube-proxy [1]. These node components run on every node, keeping pods running and maintaining the Kubernetes runtime environment. Below is an explanation of each:

kubelet

- The kubelet is responsible for managing the containers running on its machine. It communicates with the master node to report the current state of the worker node and to receive decisions from the master [1].

- Role:

- Ensures containers in pods specified by PodSpecs are running and healthy.

- Monitors the lifecycle of containers created by Kubernetes.

- Limitation: Does not manage containers that were not created by Kubernetes.

- Importance: Primary component for enforcing the desired state of pods on a node.
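The kubelet's job of enforcing desired state, together with its limitation of ignoring containers it did not create, can be illustrated with one simplified sync step. The sketch assumes containers are tracked in a dict and uses a hypothetical `managed` flag to mark Kubernetes-created containers; the real kubelet talks to the container runtime through the Container Runtime Interface (CRI) and recognizes its own containers through runtime metadata.

```python
from dataclasses import dataclass


@dataclass
class Container:
    name: str
    running: bool
    managed: bool  # hypothetical flag: True if the container was created by Kubernetes


def sync_pod(desired: list[str], actual: dict[str, Container]) -> list[str]:
    """One sync step: make the desired containers run; never touch unmanaged ones.
    Returns a log of the actions taken."""
    actions = []
    for name in desired:
        c = actual.get(name)
        if c is None:
            actual[name] = Container(name, running=True, managed=True)
            actions.append(f"start {name}")
        elif c.managed and not c.running:
            c.running = True
            actions.append(f"restart {name}")
    for c in list(actual.values()):
        # Stop managed containers that are no longer in the spec.
        if c.managed and c.name not in desired and c.running:
            c.running = False
            actions.append(f"stop {c.name}")
    return actions


state = {
    "app": Container("app", running=False, managed=True),              # crashed
    "old-sidecar": Container("old-sidecar", running=True, managed=True),
    "manual-debug": Container("manual-debug", running=True, managed=False),  # started by hand
}
print(sync_pod(["app", "log-agent"], state))
# ['restart app', 'start log-agent', 'stop old-sidecar']
```

Running `sync_pod` again with the same spec returns an empty list, and `manual-debug` is left running throughout, matching the limitation noted above.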

kube-proxy

- Function: A network proxy that runs on each node and implements part of the Kubernetes Service concept. It maintains the network rules that allow communication to pods from inside or outside the cluster.

- Role:

- Implements network rules to allow communication between pods and external/internal entities.

- Uses the operating system’s packet filtering layer (if available) to manage traffic.

- Forwards traffic itself if the packet filtering layer is unavailable.

- Alternative: Can be replaced by network plugins that handle packet forwarding and provide similar functionality.

- Importance: Ensures reliable pod connectivity across the cluster.
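At its core, kube-proxy maps a Service's stable virtual IP and port to a changing set of pod endpoints. The sketch below models only that mapping, in user space and with round-robin selection, which is an assumption chosen for clarity. In practice kube-proxy programs the kernel's packet filtering layer (for example iptables or IPVS rules), and how a backend is chosen depends on the mode; iptables mode, for instance, picks backends randomly. The `ServiceTable` class and all addresses are hypothetical.

```python
import itertools


class ServiceTable:
    """Hypothetical user-space model of the Service -> endpoints rules kube-proxy maintains."""

    def __init__(self) -> None:
        self._endpoints: dict[tuple[str, int], list[str]] = {}
        self._cursors: dict[tuple[str, int], itertools.cycle] = {}

    def update(self, cluster_ip: str, port: int, endpoints: list[str]) -> None:
        # Called whenever the set of ready pods behind a Service changes.
        key = (cluster_ip, port)
        self._endpoints[key] = list(endpoints)
        self._cursors[key] = itertools.cycle(self._endpoints[key])

    def route(self, cluster_ip: str, port: int) -> str:
        # Pick the backend pod for a new connection (round robin in this sketch).
        key = (cluster_ip, port)
        if not self._endpoints.get(key):
            raise LookupError(f"no endpoints for {cluster_ip}:{port}")
        return next(self._cursors[key])


table = ServiceTable()
table.update("10.96.0.10", 80, ["10.244.1.5:8080", "10.244.2.7:8080"])
print([table.route("10.96.0.10", 80) for _ in range(3)])
# ['10.244.1.5:8080', '10.244.2.7:8080', '10.244.1.5:8080']
```

Clients keep using the same virtual IP while `update` swaps the backends underneath, which is what makes pod replacement invisible to them.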

Container Runtime

- The container runtime runs the containers in pods. It is responsible for pulling the container image from a registry, unpacking it, and running the container [1].

- Role:

- Executes containers as per Kubernetes requirements.

- Supports lifecycle operations like starting, stopping, and monitoring containers.

- Examples of Supported Runtimes:

- containerd: Lightweight runtime optimized for Kubernetes.

- CRI-O: A Kubernetes-native runtime for OCI-compliant containers.

- Importance: Fundamental to running containerized applications in a Kubernetes environment.
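The lifecycle operations listed above can be modeled as a small state machine. The states `created`, `running`, and `exited` roughly mirror the container states that the CRI reports (CREATED, RUNNING, EXITED); the `ToyRuntime` class and its transition table are a hypothetical simplification, not the API of containerd or CRI-O. Restart is modeled as a plain transition back to `running`.

```python
class InvalidTransition(Exception):
    """Raised when a lifecycle operation is not allowed in the current state."""


# Allowed operations: op -> (required current state, next state). Simplified on purpose.
TRANSITIONS = {
    "start": ("created", "running"),
    "stop": ("running", "exited"),
    "restart": ("exited", "running"),
}


class ToyRuntime:
    """Hypothetical runtime: pull an image, create a container from it, drive its lifecycle."""

    def __init__(self) -> None:
        self.images: set[str] = set()
        self.containers: dict[str, str] = {}  # container name -> state

    def pull(self, image: str) -> None:
        self.images.add(image)  # a real runtime downloads and unpacks image layers here

    def create(self, name: str, image: str) -> None:
        if image not in self.images:
            raise InvalidTransition(f"image {image} not pulled")
        self.containers[name] = "created"

    def apply(self, name: str, op: str) -> str:
        required, nxt = TRANSITIONS[op]
        state = self.containers[name]
        if state != required:
            raise InvalidTransition(f"cannot {op} container {name} in state {state}")
        self.containers[name] = nxt
        return nxt


rt = ToyRuntime()
rt.pull("nginx:1.25")
rt.create("web", "nginx:1.25")
print([rt.apply("web", op) for op in ("start", "stop", "restart")])
# ['running', 'exited', 'running']
```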

The Role of the Container Runtime in Worker Nodes

The container runtime is a critical component installed on every worker node. It is responsible for:

- Pulling container images from container registries (e.g., Docker Hub).

- Running containers based on instructions from the Kubelet.

- Managing container lifecycles, such as starting, stopping, or restarting containers as needed.

- Examples of Container Runtimes:

- Docker Engine (its built-in integration, dockershim, was deprecated in Kubernetes v1.20 and removed in v1.24 in favor of CRI-compatible runtimes).

- containerd (one of the most widely used runtimes today).

- CRI-O (used in some Kubernetes distributions).
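When a pod spec names an image such as `nginx`, the runtime must expand that short name into a full registry reference before pulling it. The function below is a simplified sketch of the Docker-style normalization convention that Docker and containerd follow: the default registry is `docker.io`, official images live under `library/`, and the default tag is `latest`. It ignores digests (`@sha256:...`) and other edge cases, `normalize_image` is a hypothetical name, and CRI-O can be configured to resolve short names differently.

```python
def normalize_image(ref: str) -> str:
    """Expand a short image reference to registry/repository:tag (digests not handled)."""
    name, tag = ref, "latest"
    if ":" in ref.rsplit("/", 1)[-1]:
        # A colon after the last slash separates the tag (not a registry port).
        name, tag = ref.rsplit(":", 1)
    first, _, rest = name.partition("/")
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, repo = first, rest  # explicit registry host, e.g. quay.io or localhost:5000
    else:
        registry, repo = "docker.io", name
    if registry == "docker.io" and "/" not in repo:
        repo = "library/" + repo  # official Docker Hub images live under library/
    return f"{registry}/{repo}:{tag}"


for ref in ["nginx", "nginx:1.25", "bitnami/redis:7.2", "localhost:5000/app"]:
    print(ref, "->", normalize_image(ref))
# nginx -> docker.io/library/nginx:latest
# nginx:1.25 -> docker.io/library/nginx:1.25
# bitnami/redis:7.2 -> docker.io/bitnami/redis:7.2
# localhost:5000/app -> localhost:5000/app:latest
```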

Interaction Between Kubernetes Components

Here's how the container runtime fits into the Kubernetes architecture:

- User Interaction:

- A user deploys an application via kubectl, defining a deployment or pod spec.

- Control Plane:

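- The API Server receives the request and stores the spec in etcd.

- The Controller Manager creates the pod objects required by higher-level resources such as a Deployment and keeps the desired number of replicas running.

- The Scheduler assigns each new pod to a specific worker node based on available resources.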

- Worker Node:

- The Kubelet on the assigned worker node picks up the pod spec.

- The Kubelet instructs the container runtime to:

- Pull the specified container image (e.g., nginx) from a registry.

- Create and start the container based on the image.

- Attach networking and storage resources as needed.

- Pod and Containers:

- The container runtime isolates each container within the worker node, giving it its own filesystem and process space; containers in the same pod share the pod's network namespace.
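The whole path can be condensed into a short, single-process simulation. Every function and data structure here is a hypothetical stand-in: a dict plays etcd, the controller expands a Deployment into pod objects, the scheduler uses a simple "most free CPU that fits" rule, and the kubelet returns the runtime actions as strings. The point is only the order in which components hand work to each other, not how any of them is actually implemented.

```python
etcd: dict[str, dict] = {}  # stand-in for etcd: key -> object


def api_server_create(key: str, obj: dict) -> None:
    etcd[key] = obj  # authentication and validation omitted


def controller_manager() -> None:
    # Create pod objects for each Deployment until its replica count is met.
    for key, obj in list(etcd.items()):
        if key.startswith("/deployments/"):
            name = key.rsplit("/", 1)[-1]
            for i in range(obj["replicas"]):
                etcd.setdefault(f"/pods/{name}-{i}", {"image": obj["image"], "node": None})


def scheduler(free_cpu: dict[str, int], cpu_request: int) -> None:
    # Bind each unscheduled pod to the node with the most free CPU that still fits.
    for key, pod in etcd.items():
        if key.startswith("/pods/") and pod["node"] is None:
            fits = [n for n, cpu in free_cpu.items() if cpu >= cpu_request]
            if fits:
                node = max(fits, key=free_cpu.get)
                pod["node"] = node
                free_cpu[node] -= cpu_request


def kubelet(node: str) -> list[str]:
    # Run the pods bound to this node by instructing the (simulated) container runtime.
    actions = []
    for key, pod in etcd.items():
        if key.startswith("/pods/") and pod["node"] == node:
            actions += [f"pull {pod['image']}", f"create {key}", f"start {key}"]
    return actions


api_server_create("/deployments/web", {"image": "nginx", "replicas": 2})  # user via kubectl
controller_manager()
scheduler({"node-a": 1000, "node-b": 1500}, cpu_request=500)
print({k: v["node"] for k, v in etcd.items() if k.startswith("/pods/")})
# {'/pods/web-0': 'node-b', '/pods/web-1': 'node-a'}
print(kubelet("node-b"))  # ['pull nginx', 'create /pods/web-0', 'start /pods/web-0']
```

Note that the control-plane functions only write records; containers come into being only in `kubelet`, on the node a pod was bound to.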

Key Points in the Architecture

- Worker Nodes Host Containers:

- The actual running containers (e.g., nginx) exist inside the worker nodes.

- The container runtime provides the abstraction that allows containers to run in isolated environments.

- Control Plane Doesn’t Run Application Workloads:

- The control plane manages the cluster and delegates the actual execution of application workloads to the worker nodes.

- Interaction Path:

- User → API Server → Scheduler → Worker Node (Kubelet → Container Runtime) → Container.

References:

[1] N. Nguyen and T. Kim, "Toward Highly Scalable Load Balancing in Kubernetes Clusters," in IEEE Communications Magazine, vol. 58, no. 7, pp. 78-83, July 2020, doi: 10.1109/MCOM.001.1900660. URL: https://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=9161999&isnumber=9161976

[2] N. Zhou, Y. Georgiou, M. Pospieszny, et al., "Container orchestration on HPC systems through Kubernetes," in Journal of Cloud Computing, vol. 10, art. no. 16, 2021, doi: 10.1186/s13677-021-00231-z. URL: https://doi.org/10.1186/s13677-021-00231-z