Bridging Textual and Tabular Data for Cross-Domain Text-to-SQL Semantic Parsing

View PDF: https://arxiv.org/abs/2012.12627

Challenges

The introduction of the paper "Bridging Textual and Tabular Data for Cross-Domain Text-to-SQL Semantic Parsing" sets the stage for the challenges faced in converting natural language questions into SQL queries, particularly in cross-domain scenarios. Here are the key points discussed in the introduction:

-

Cross-Domain Challenges: The introduction emphasizes the complexity of interpreting natural language questions that can relate to various database schemas. Each database may have different structures and field names, making it difficult for models to generalize across different domains.

-

Importance of Schema Understanding: It highlights that the interpretation of a question is heavily dependent on the underlying relational database schema. This means that a model must not only understand the question but also how it relates to the specific database it is querying.

-

Existing Limitations: The introduction points out that many existing models struggle with this task, particularly when faced with questions that have similar intents but require different SQL logical forms based on the database schema. This limitation is crucial as it affects the accuracy and reliability of the generated SQL queries.

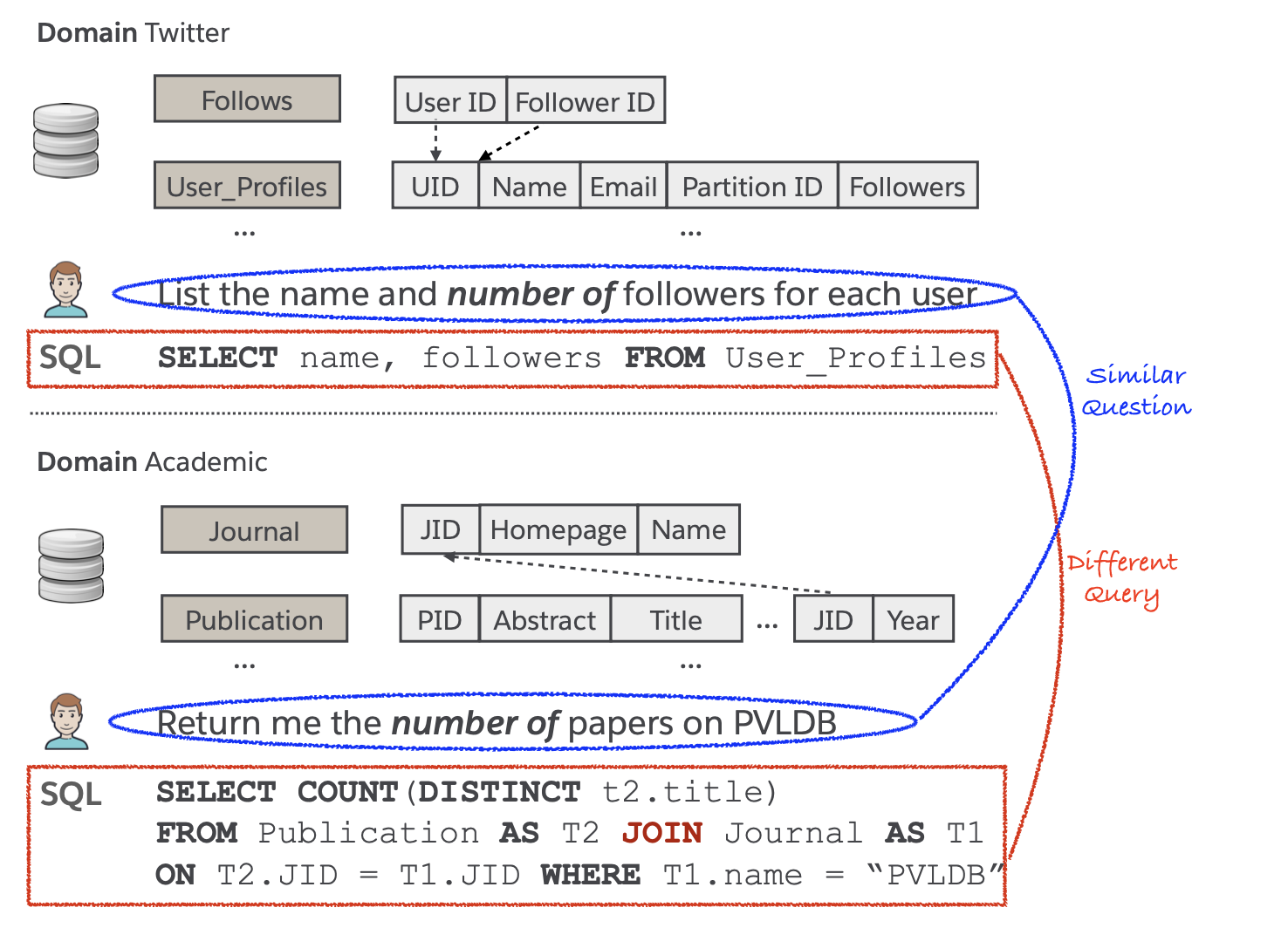

Figure 1 - Analysis

-

Key Insight: The main insight from Figure 1 is that even slight variations in the database schema can lead to significant differences in the SQL queries generated. This underscores the necessity for models like BRIDGE to effectively capture the relationship between the natural language input and the specific database schema being queried.

-

Implications for Model Design: The figure serves to illustrate the challenges that BRIDGE aims to address. By showing how different databases require different SQL interpretations of the same question, it reinforces the need for a model that can adapt to various schemas and understand the context of the question in relation to the database.

Paper's Introduction

The paper then introduces the BRIDGE model, which combines a BERT-based encoder with a sequential pointer-generator to perform end-to-end cross-DB text-to-SQL semantic parsing. The model is evaluated on two well-studied cross-database text-to-SQL benchmark datasets: Spider and WikiSQL. The paper demonstrates that the BRIDGE model achieves state-of-the-art performance on these benchmarks, highlighting its effectiveness in generalizing over natural language variations and accurately modeling the target database structure and context.

Problem Definition

- The paper formally defines the cross-DB text-to-SQL task as follows: Given a natural language question

Qand the schemaS=T,Cfor a relational database, the parser needs to generate the corresponding SQL queryY. - The schema S consists of tables

T={ t1 , ... , tN }and fieldsC={c11,...,c1|T1|,...,cn1,...,cN|TN|}. Here, ti represents each table, and ci represents each field within those tables. - The goal is to accurately model the natural language question Q, the target database structure S, and the contextualization of both, in order to generate the correct SQL query Y.

The model has access to the value set of each field instead of full DB

Question-Schema Serialization and Encoding with BERT

The purpose of Question-Schema Serialization and Encoding, especially in the context of text-to-SQL tasks, is to prepare the input data (natural language question and database schema) in a format that can be effectively processed by a neural model, such as BERT.

- Serialization:

- The natural language question is tokenized into a sequence of words or subwords.

- The database schema is represented as a structured format, including table names, column names, and possibly other metadata such as data types or primary/foreign key indicators.

- Special tokens are often added to distinguish different parts of the input, such as

[T]for table names,[C]for column names, and[V]for values mentioned in the question that appear in the database schema.

- Encoding with BERT:

- The serialized question and schema are concatenated and fed into a BERT model.

- BERT is a transformer-based pre-trained language model that can generate contextual embeddings for each token in the input sequence.

- These embeddings capture the meaning and relationships within the input data, taking into account both the natural language question and the database schema.

- BERT's bidirectional encoding capability allows it to consider the context of each token from both left-to-right and right-to-left, providing a more comprehensive understanding of the input data.

- Additional Encoding Considerations:

- In some cases, additional meta-features of the database schema, such as whether a column is a primary key or a foreign key, may be encoded and concatenated with the token embeddings.

- Anchor text, which are values mentioned in the question that appear in the database schema, may also be used to enhance the encoding of the input data.

Why It's Important:

- Serialization and encoding with BERT are crucial steps for preparing the input data in a form that can be effectively processed by the neural model.

- They allow the model to understand the semantics of the natural language question and the structure of the database schema, enabling it to generate accurate SQL queries.

- By leveraging BERT's powerful contextual embedding capabilities, the model can better capture the relationships and dependencies between the question and the database schema, leading to improved performance in text-to-SQL tasks.

Bridging

- Bridging is a mechanism used to enhance the understanding of the schema and its dependencies with the question in NLP or database querying tasks.

- Modeling only table/field names and relations is not always sufficient to capture the full semantics of the schema and its relationship to the question.

- Anchor text is used to link value mentions in the question with corresponding database fields.

- A fuzzy string match is performed between the question and the picklist of each field in the database to find anchor texts.

- Matched field values (anchor texts) are inserted into the question-schema representation, succeeding the corresponding field names and separated by a special token.

- If multiple values are matched for one field, they are concatenated in the order they were matched.

- If a question mention is matched with values in multiple fields, all matches are added to the question-schema representation.

- Anchor texts provide additional lexical clues for the model to identify the corresponding mention in the question.

- Bridging helps to bridge the gap between the question and the schema, enabling the model to better understand the context and intent of the question.

Decoder

Decoder component of a model architecture that is designed to handle natural language querying tasks, such as converting a natural language question into a structured query that can be executed on a database.

-

Purpose: The Decoder's purpose is to generate the final SQL query based on the processed input question and the enhanced question-schema representation.

-

Architecture: The Decoder is typically implemented as a sequence-to-sequence (Seq2Seq) model, which consists of an encoder and a decoder. However, in this context, the encoder is not explicitly discussed, as the focus is on the Decoder component.

Input: The Decoder takes as input the enhanced question-schema representation (which includes the original question, the schema information, and the anchor texts provided by the bridging mechanism).

Output: The Decoder generates the SQL query as a sequence of tokens. These tokens are typically selected from a predefined vocabulary that includes SQL keywords, table/field names, and special tokens (e.g., placeholders for values).

Attention Mechanism: The Decoder may incorporate an attention mechanism to focus on specific parts of the input sequence (the enhanced question-schema representation) while generating each token of the SQL query. This helps the Decoder to align the generated query with the relevant parts of the input.

Training: The Decoder is trained jointly with the other components of the model (e.g., the encoder, if present, and the bridging mechanism) using a suitable loss function that measures the difference between the generated SQL query and the ground truth SQL query.

Evaluation: The performance of the Decoder (and the overall model) is evaluated using metrics such as accuracy, precision, recall, and F1-score, which measure how well the generated SQL queries match the ground truth queries.

Design Principles of State-of-the-Art Cross-DB Text-to-SQL Semantic Parsers

State-of-the-art cross-database (cross-DB) text-to-SQL semantic parsers adopt several key design principles to effectively address the challenges associated with mapping natural language questions to executable SQL queries. These principles include:

-

Contextualized Question and Schema Representation: The first principle emphasizes the need for the question and database schema to be represented in a way that contextualizes them with each other. This means that the model should understand how the components of the question relate to the fields in the database schema, allowing for more accurate parsing and query generation

-

Utilization of Large-Scale Pre-trained Language Models: The second principle involves leveraging large-scale pre-trained language models (LMs) such as BERT and RoBERTa. These models significantly enhance parsing accuracy by providing better representations of text and capturing long-term dependencies within the input data. This capability is crucial for understanding complex natural language questions

-

Incorporation of Database Content for Ambiguity Resolution: Under data privacy constraints, the third principle suggests that utilizing available database content can help resolve ambiguities present in the database schema. By incorporating actual data values, the model can better interpret the question and generate more accurate SQL queries

-

Handling of Cross-DB Variability: The design principles also address the variability across different databases. Since the same natural language question can lead to different SQL queries depending on the database schema, the parsers must be capable of generalizing their understanding to work effectively across multiple databases. This requires sophisticated modeling of the relationships between the question and the schema.

BRIDGE Model

The BRIDGE model, as described in the paper, employs a series of innovative techniques to effectively parse natural language questions into SQL queries. Here’s a detailed breakdown of the model's components and functionalities:

-

Input Representation

- Tagged Sequence: The model begins by representing both the natural language question and the database schema in a single tagged sequence. This representation includes special tokens that denote different components, allowing the model to understand the relationships between the question and the database fields.

- Augmentation with Cell Values: A subset of the fields in the database schema is augmented with cell values mentioned in the question. This augmentation helps the model to focus on relevant data points, enhancing its ability to generate accurate SQL queries.

-

Encoding with BERT

- BERT Architecture: The hybrid sequence is encoded using BERT, a transformer-based model that excels in understanding context and relationships within text. BERT's ability to capture long-range dependencies is crucial for accurately interpreting complex natural language questions.

- Minimal Additional Layers: After encoding with BERT, the model uses minimal subsequent layers. This design choice contributes to the model's efficiency while maintaining high performance in generating SQL queries.

-

Attention Mechanism

- Deep Attention for Contextualization: BRIDGE utilizes a fine-tuned deep attention mechanism within BERT to contextualize the input. This allows the model to focus on the most relevant parts of the question and schema, effectively capturing the dependencies necessary for accurate SQL generation.

- Pointing Distribution: The model employs the attention weights from the last head of BERT to compute the pointing distribution, which is essential for generating SQL queries that may require copying values directly from the input question.

-

Pointer-Generator Decoder

- SQL Query Generation: The pointer-generator decoder is a key component of BRIDGE, enabling it to generate executable SQL queries. This decoder can copy values from the input question, which is a significant advantage over many existing models that do not utilize this capability.

- Schema-Consistency Driven Search Space Pruning: The model incorporates a pruning strategy that ensures the fields used in SQL clauses come only from the tables specified in the FROM clause. This approach reduces the search space for potential SQL queries, improving both efficiency and accuracy.

-

Performance and Evaluation

- State-of-the-Art Results: BRIDGE has achieved impressive performance on the Spider benchmark, with execution accuracy rates of 65.5% on the development set and 59.2% on the test set. This performance is notable given the model's simplicity compared to other recent models in the field.

- Generalization Potential: The architecture of BRIDGE is designed to effectively capture cross-modal dependencies, suggesting that it has the potential to generalize to other text-database related tasks beyond SQL query generation.